Roundup #5: The AI Epoch

Thoughts on the New AI Epoch; An idea maze for LLMs; Punctuated Equilibrium; The fate of Google; The BuffettBot Experiment; Space 2022 in 4 photos

Hello again! These are my latest thoughts on the areas I’m interested in. I hope you’ll enjoy learning more.

In this roundup:

🤖 A.I.

Essay: Thoughts on the AI epoch — An idea maze for LLMs; Punctuated Equilibrium; The AI revolution; Where’s the moat?; The fate of Google.

My thoughts on AI (as a podcast!)

The BuffettBot Experiment

🚀 Space — 4 photos and a link to summarize 2022.

🔗 Interesting Links — Other takes on AI; Derek Thompson essays; and Choosing Good Quests.

🤖 A.I.

Thoughts on the AI epoch

What more can be said about the AI boom that began its ascent less than a year ago? A lot! The potential of AI is immense and its influence on our lives is sure to be significant. And so I’ll continue. . .

In this mini-essay I’ll focus on Large Language Models (LLMs), but most of my thoughts apply to other AI efforts as well.

An easy way to think of it is that LLMs will soon become the “autocomplete for everything”:

What’s common to all of these visions is something we call the “sandwich” workflow. This is a three-step process. First, a human has a creative impulse, and gives the AI a prompt. The AI then generates a menu of options. The human then chooses an option, edits it, and adds any touches they like.

. . . So that’s our prediction for the near-term future of generative AI – not something that replaces humans, but something that gives them superpowers. A proverbial bicycle for the mind. Adjusting to those new superpowers will be a long, difficult trial-and-error process for both workers and companies, but as with the advent of machine tools and robots and word processors, we suspect that the final outcome will be better for most human workers than what currently exists.

There seems to be an unlimited number of areas that language prompting + completion will enable. Some are obvious: a new iteration of Google, help with writing, content generation, help with marketing copy, etc. Many startups and tools have already sprung up to tackle these.

Some of the really interesting applications incubating now will have action models as a big component. Models will be able to take actions like searching the web, ordering an item, making a reservation, using a calculator, or using any other digital tool that humans can use. Imagine ChatGPT being able to confirm its answers against multiple sources, or having access to all your personal records so it can assist you.
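To make the idea of an action model a bit more concrete, here is a minimal Python sketch of just the dispatch step, assuming the model emits a structured action such as `{"tool": "calculator", "input": "12 * (3 + 4)"}`. The action format, the `TOOLS` registry, and the `make_reservation` stub are all hypothetical; no real model is called, and the actions are hardcoded to stand in for model output.

```python
import ast
import operator

# Arithmetic operators the hypothetical calculator tool is allowed to use.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    """Evaluate a basic arithmetic expression by walking its syntax tree (no eval())."""
    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError(f"Unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval"))


def make_reservation(details: str) -> str:
    """Hypothetical stub: a real version would call a booking API."""
    return f"Reservation requested: {details}"


# Only tools registered here can be triggered by the model.
TOOLS = {"calculator": calculator, "make_reservation": make_reservation}


def run_action(action: dict):
    """Dispatch a model-proposed action of the form {"tool": ..., "input": ...}."""
    tool = TOOLS.get(action["tool"])
    if tool is None:
        raise KeyError(f"Unknown tool: {action['tool']}")
    return tool(action["input"])


# In a real system the model would produce these actions; here they are hardcoded.
print(run_action({"tool": "calculator", "input": "12 * (3 + 4)"}))  # 84
print(run_action({"tool": "make_reservation", "input": "dinner for 2 at 7pm"}))
```

Keeping tools behind an explicit registry means the model can only trigger actions someone has deliberately exposed, and the calculator parses the expression instead of executing arbitrary code with `eval()`.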

Prompt engineers are already discovering how much you can do with the existing models, without any new advancements or changes to the underlying base model. Even if GPT-4 or an open-source LLM from Stability.ai takes years to come out, the existing tools are enough to drive huge changes.

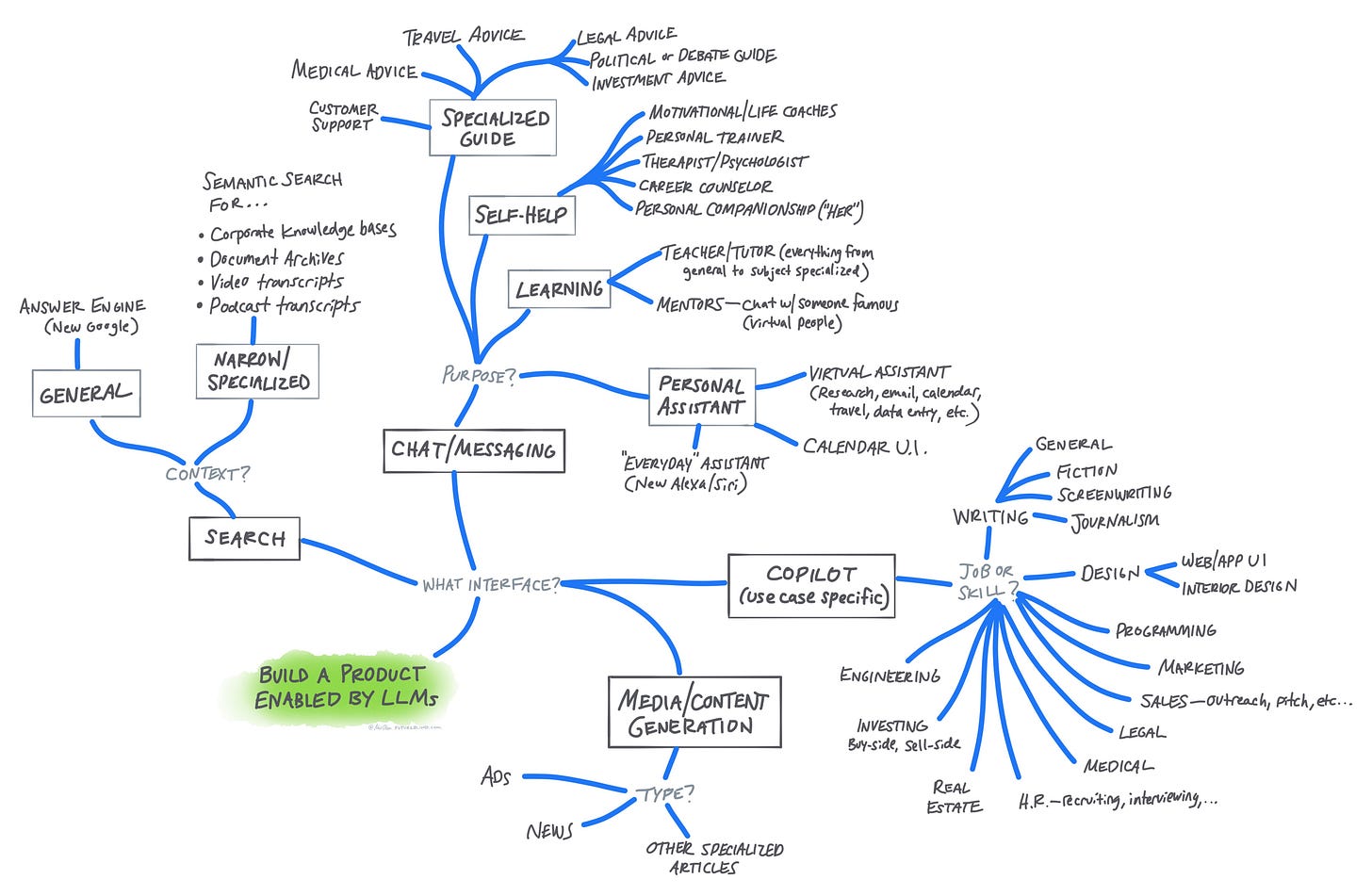

An idea maze for LLMs

The above is an idea maze I sketched out for products enabled by LLMs. The key question it starts with is “What kind of interface would the user have with the product?”

Search — you search for something just like you do on Google, but the results are much more relevant to what you’re truly looking for.

Chat — you’re having a conversation with the AI, and as part of that back-and-forth communication it helps you accomplish some goal.

Copilot — the AI “sits beside you” in whatever you’re working on, assisting where it can and giving whatever you’d normally do a big productivity boost. The actual interface in this case would be highly specific to the use case.

Content generation — you give the AI some minor guidance upfront, and it generates the entire content to use (this might be considered a Copilot interface).

[Games] — similar to chat, but you’re not necessarily having a conversation. The AI is leading you or enabling some sort of game-like interface for entertainment, learning, or problem solving.

A few highlighted examples:

Personal assistant — Imagine Siri or Alexa if they actually had some serious capabilities. You say “My wife and I want to take a trip to Europe this summer,” and it uses travel booking APIs and TripAdvisor to create a handful of itineraries, along with their prices and the best aspects of each. You pick one, and it makes all the reservations for you. It follows up with reminders and suggestions on what to pack.

Legal copilot — You’re a lawyer at a law firm. A LegalAI tool you use knows every law in existence, along with every prior case and its outcome. You use it to pull out the most important points of a 200-page contract and summarize them for your client. You use it to draft an agreement by listing the most important considerations, and it suggests points you may have forgotten. Most impressively, you’re representing your client in a corporate fraud case, and after ingesting all the relevant details, LegalAI suggests ways to win the case, including esoteric legal loopholes and creative arguments to sway the judge. The digital version of Extraordinary Attorney Woo.

Personal tutor — You’re a teacher or the parent of a child in 5th grade. They’re struggling and falling behind in math. You first talk to an AI tutor service to give it an overview of the situation, just like you would with a real tutor. The child speaks with the AI, and it finds out more about what they know and how they learn best. It does daily sessions with them over the course of a few months, and they are now caught up with the rest of the students. Kids whose families could never have afforded a private tutor now have the most knowledgeable tutors in the world. AI tutors still can’t fully replicate in-person tutors, but for most kids this doesn’t matter.

Punctuated equilibrium: Welcome to the AI revolution

The study of ecology can provide many good analogies to the world of business and technology. Both are complex adaptive systems, with structures and behaviors emerging over time as they evolve. Both have building blocks that combine into many variations as they adapt to fit their changing environment.

One of these analogies is punctuated equilibrium.

Punctuated equilibrium is the theory that species change very little for long periods of time, with occasional rapid changes taking place over short periods. In other words, evolution progresses in short bursts of rapid change separated by long periods of stasis. These changes play out more like S-curves or step functions than smooth linear or exponential growth.

It’s very clear that the current AI epoch is a punctuation event in the history of technology and business: a short burst during which the world changes quickly in response to new environmental conditions.

It’s on the level of other computing revolutions like smartphones, the internet, and personal computers.

Microprocessors and other computer hardware led to cheap PCs in the hands of businesses and individuals. This allowed them to use software, the real general-purpose productivity booster. Software allowed businesses to automate and streamline many of their operations, and individuals to do things they never thought possible before.

But software can be clunky. It still takes a lot of effort and maintenance to solve problems using software, and it is really bad at tasks that involve reasoning, learning from data or making decisions.

Enter AI models — Software 2.0:

Software 1.0 is code we write. Software 2.0 is code written by the optimization based on an evaluation criterion (such as “classify this training data correctly”). It is likely that any setting where the program is not obvious but one can repeatedly evaluate the performance of it (e.g. — did you classify some images correctly? do you win games of Go?) will be subject to this transition, because the optimization can find much better code than what a human can write.

AI can take in data, learn from it, and make decisions, much like humans can. This means it can automate tasks that are difficult or impossible to do with traditional software alone. AI is opening up possibilities and opportunities that weren’t available before.
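The Software 1.0 versus 2.0 distinction quoted above can be shown in miniature. In this sketch, built on toy data invented for illustration, one classifier is a rule a human wrote, while the other is found by a perceptron: a simple optimizer that keeps adjusting weights until the evaluation criterion (classify every training example correctly) is met. Real systems use far larger models and gradient-based training, but the division of labor is similar: humans supply data and a criterion, and the optimization produces the program.

```python
# Toy training data invented for illustration: ((x1, x2), label).
DATA = [
    ((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.5, 0.4), 0), ((0.1, 0.8), 0),
    ((0.9, 0.9), 1), ((0.6, 0.7), 1), ((1.0, 0.5), 1), ((0.3, 1.2), 1),
]


def accuracy(predict, data):
    """The evaluation criterion: fraction of examples classified correctly."""
    return sum(predict(*x) == y for x, y in data) / len(data)


# Software 1.0: a human writes the rule directly.
def classify_v1(x1, x2):
    return 1 if x1 + x2 > 1.0 else 0


# Software 2.0: an optimizer (perceptron updates) searches for the weights.
def train_perceptron(data, epochs=1000, lr=0.1):
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), y in data:
            pred = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            err = y - pred  # -1, 0, or +1
            if err:
                errors += 1
                w1 += lr * err * x1
                w2 += lr * err * x2
                b += lr * err
        if errors == 0:  # every training example is now classified correctly
            break

    # The learned "program" is just the rule with the weights the optimizer found.
    def classify_v2(x1, x2):
        return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

    return classify_v2


classify_v2 = train_perceptron(DATA)
print(accuracy(classify_v1, DATA))  # 1.0
print(accuracy(classify_v2, DATA))  # 1.0
```

Nobody typed the weights inside `classify_v2`; they came entirely from repeatedly checking performance against the data, which is the shift the quote describes.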

I’ve seen some comparisons, both negative and positive, to the crypto/web3 hype of 2018 or 2020. I don’t think the comparison is apt at all. Here are a few reasons why:

With the internet or crypto, you needed other people to be involved to make it more useful. The current crop of AI is useful out of the box and doesn’t need network effects, similar to PCs. (Although in both cases it still helps to have more people using it in the long run.)

Web3 is still a solution looking for a problem. It will have legitimate uses (you can make a good argument that AI will actually drive those uses in some cases), but AI already has much clearer products and features that deliver immediate value to users. See my experiment with BuffettBot in the section below: I built it in a week, and within a day thousands of people were getting use out of it!

NZS Capital’s Brad Slingerlend phrased the potential effects well:

Could we see a doubling of productivity across nearly every information-based job? It’s such early days, yet the results are so promising, that I am willing to venture into the extremely dangerous territory of making predictions – and declare that we just might see massive productivity increases from chatbots and generative AI unlike anything we have yet seen over the course of the Information Age – outweighing even PCs, smartphones, and the Internet. I hate the expression “buckle up”, but it might be called for here.

Where’s the moat?

Continuing the comparison to past computing revolutions: I believe that, relative to the internet or PCs, this epoch of AI is likely to be less disruptive to incumbents and more productivity-boosting for humanity.

It doesn’t have built-in network effects or economies of scale like the PC app ecosystem or the internet. Nevertheless, I think competitive advantages can still be strong depending on the area:

Technical — In some niche areas, model structure and implementation may provide an advantage for long enough to lock the product into some other defensible flywheel. The models don’t even need to be higher quality. Making them faster, cheaper to run, or available offline can give distinct advantages in some areas.

Learning curves & data network effects — If a model uses Reinforcement Learning from Human Feedback (RLHF), the more people use it, the better the model becomes. The more niche the use case, the more important this becomes.

Switching costs — Many will have strong switching costs due to: (1) tight integration with workflow, (2) personal attachment (like a personal assistant), or (3) regular/habitual usage associated with interface or brand.

The last point about regular usage associated with an interface may be key:

Any company that can control the user's main interface will win.

Scott Belsky, the CPO of Adobe, has a great theory about this:

Companies that successfully aggregate multiple services in a single interface have a chance of really shaking up industries. As soon as you rely on one interface for a suite of needs, you become loyal to the interface rather than the individual services it provides.

Where will users interact with the AI model? If it’s easier for people to use it through an existing, familiar interface, it’s much easier for incumbents to adapt and add AI as a feature. Startups had an advantage in the internet era because the interface was completely new in most cases, which made it disruptive (in the Christensen sense) to incumbents’ business models.

It’s clear that AI writing assistants will be big. But who will win here? If Microsoft Word, Notion, and other existing apps can integrate AI properly within a reasonable time, most users would rather stay than move to a new, unfamiliar interface. AI seems more like a sustaining innovation in this case.

Which brings us to the big one: Google.

If you ask the same question about Google, the next question becomes “How long will it take for Google to integrate LLMs into its main search UI?” If in the next few years it can do this well enough that people don’t go elsewhere for general-purpose queries, users will stay.

The question after that is what this will do to Google’s business model. Is the change still sustaining? Possibly. Even so, it could make search less financially lucrative.

Liberty shared some good thoughts on how Google will be impacted here [paid]. One plausible scenario is that Google’s gross margins go down a bit as the cost of serving each search increases.

Just before publishing this, Ben Thompson of Stratechery wrote a great essay that essentially aligned with my thoughts above. The most relevant paragraph:

What is notable about this history is that the supposition I stated above isn’t quite right; disruptive innovations do consistently come from new entrants in a market, but those new entrants aren’t necessarily startups: some of the biggest winners in previous tech epochs have been existing companies leveraging their current business to move into a new space. At the same time, the other tenets of Christensen’s theory hold: Microsoft struggled with mobile because it was disruptive, but SaaS was ultimately sustaining because its business model was already aligned.

My thoughts on AI (as a podcast!)

A few months ago I had a discussion on my friend Eric Jorgenson’s podcast. We intended to talk about a variety of topics, but ended up discussing mostly AI.

Given how fast progress has been moving recently, two months feels like years, so some of the content may already be out of date. Some topics we discuss:

What is computer vision? How does a neural network work? How we’re making the world legible to computers. What will AI be capable of? How will Google fare in this new paradigm? Can computers smell?

#051 AI Evolution: Computer Vision, Olfactory Computation, and Neural Nets with Max Olson

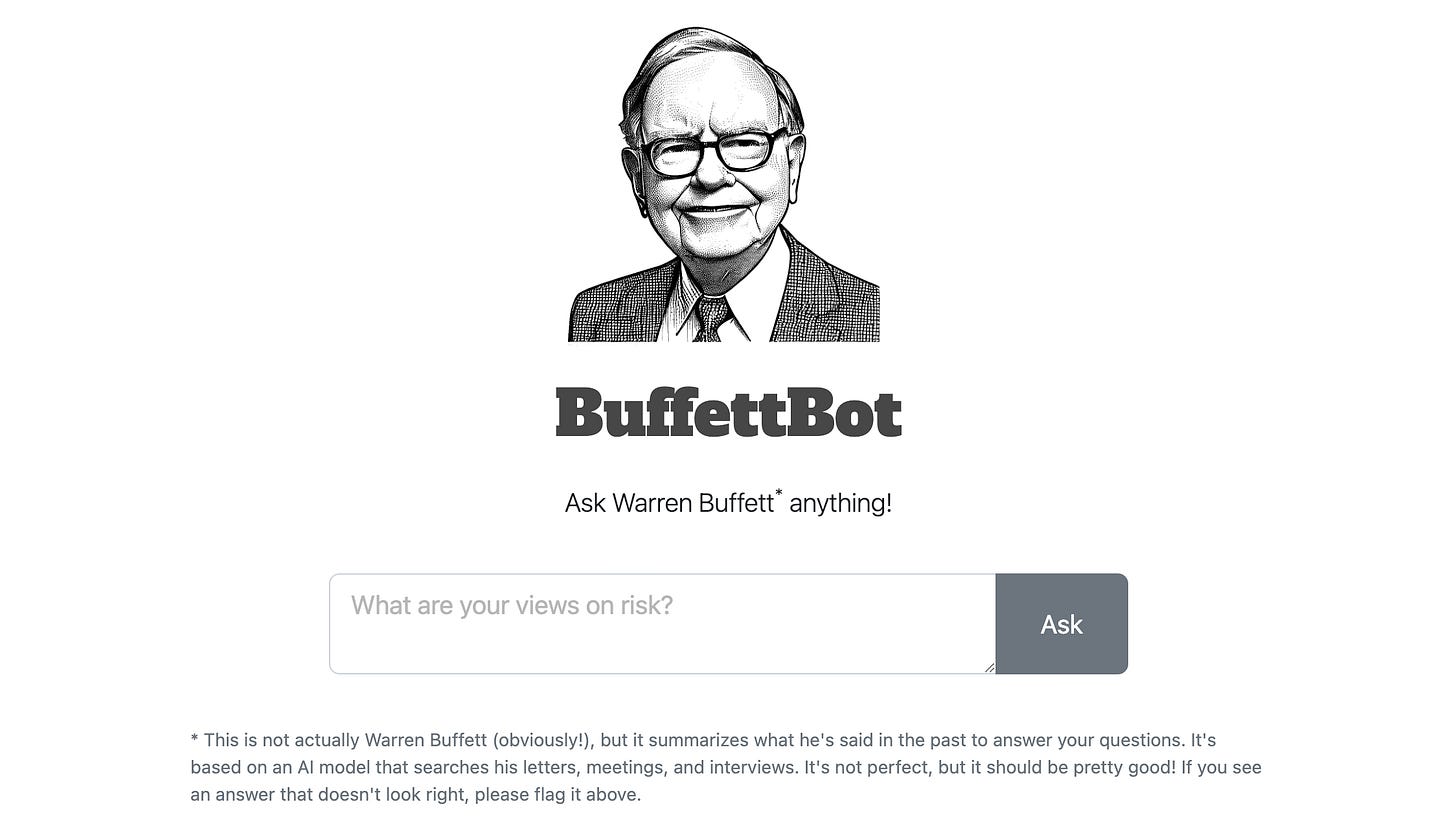

The BuffettBot experiment

On December 14, I launched BuffettBot.com.

BuffettBot uses the OpenAI API to search an archive of Warren Buffett’s writings, so you can ask “Warren Buffett” questions. It even links each answer to the quotes and sources it pulled the information from.
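How BuffettBot assembles its prompts isn’t spelled out here, so what follows is only a minimal sketch of one common pattern (often called retrieval-augmented generation): number the retrieved excerpts in the prompt, ask the model to cite them, then map the citation markers in its answer back to sources. The `Passage` type, the prompt wording, and the placeholder excerpts are hypothetical. The retrieval step and the OpenAI API call are omitted, and the answer string is hardcoded in place of a real model response.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # which letter, transcript, etc. the excerpt came from
    text: str


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Assemble a prompt that asks the model to answer only from numbered excerpts."""
    lines = [
        "Answer the question in the voice of Warren Buffett, using only the excerpts below.",
        "Cite the excerpts you rely on by number, like [1].",
        "",
    ]
    for i, p in enumerate(passages, start=1):
        lines.append(f"[{i}] ({p.source}) {p.text}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


def cited_sources(answer: str, passages: list[Passage]) -> list[str]:
    """Map citation markers like [2] in the model's answer back to their sources."""
    numbers = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    # Ignore markers that don't correspond to any excerpt in the prompt.
    return [passages[n - 1].source for n in numbers if 1 <= n <= len(passages)]


# Placeholder excerpts; a real archive would supply retrieved text here.
passages = [
    Passage("Letter A (placeholder)", "Placeholder excerpt about insurance float."),
    Passage("Interview B (placeholder)", "Placeholder excerpt about competitive moats."),
]
prompt = build_prompt("What makes a durable moat?", passages)

# Stand-in for the model's reply to `prompt`.
fake_answer = "A durable moat protects a business from competitors [2]."
print(cited_sources(fake_answer, passages))  # ['Interview B (placeholder)']
```

Numbering the excerpts is what makes it cheap to show the reader exactly which source backs each part of an answer.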

Unfortunately the service only ran for 3 days before I had to FLIP THE KILL SWITCH.

No, BuffettBot didn’t become sentient and threaten to allocate the world’s resources. The usage just exploded and I couldn’t justify keeping it running given the daily cost of using the API.

Even though I had to shut it down for now, this was ultimately a successful experiment.

What’s the future of BuffettBot?

Given the cost to run it in the current form, I’m not sure what the future holds. I don’t think the best model for something like this is pay-per-use. (Although a platform with many of these bots may justify something like “charge $10 to ask 100 questions”.)

A version that’s much more cost-efficient and still very valuable would be something like the following:

“Buffett Archive” where you can semantically search all of Warren Buffett’s writings and interviews. Semantic search means you’re not doing a ⌘F and looking for an exact phrase; you’re looking for meaning in the text. So you could search “how did GEICO’s float change in 1995” or “the Washington Post’s moat” and get the most relevant results. The app would let you browse all the results, showing each one in the context of the letter, transcript, etc. where it was found.
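The ranking step behind semantic search can be sketched in a few lines of Python, assuming each passage and each query has already been turned into an embedding vector by some embedding model (not shown). The three-dimensional vectors and passage labels below are invented for illustration; real embeddings have hundreds or thousands of dimensions. Passages are ranked by cosine similarity to the query, and because the comparison is between vectors rather than words, a passage can rank highly without containing any of the query’s exact words.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid dividing by zero for empty vectors
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, index, top_k=2):
    """Return the top_k passages ranked by similarity to the query embedding."""
    scored = [(cosine_similarity(query_vec, vec), passage) for passage, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]


# Toy "embeddings" invented for illustration only.
INDEX = [
    ("Passage on a newspaper's franchise value", [0.9, 0.1, 0.0]),
    ("Passage on insurance float growth", [0.1, 0.9, 0.1]),
    ("Passage on a candy brand's pricing power", [0.7, 0.0, 0.3]),
]

query = [0.8, 0.05, 0.1]  # pretend embedding of "the Washington Post's moat"
print(semantic_search(query, INDEX))
```

In a real archive, each result would also carry a pointer back to its letter or transcript so the app can show it in context.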

Let me know if you’d be interested in something like this! Given the popularity of BuffettBot, I think this would be pretty valuable. And a “Munger Archive” as well.

🚀 Space

Although AI progress in 2022 seemed like the main show, we made some pretty big leaps forward in space.

The past year can be best summarized in 4 photos:

For a more detailed summary of what went down in space last year, Orbital Index gave a great rundown here.

🔗 Interesting Links

Other good AI takes I’m fond of:

Before the flood, by Samuel Hammond

AI from Superintelligence to ChatGPT, by Séb Krier (Works in Progress)

The Age of Industrialized AI, by Daniel Jeffries

Superhistory, Not Superintelligence, by Venkatesh Rao

Derek Thompson has written a few good essays recently:

Why America Doesn’t Build What It Invents — The U.S. just made a breakthrough in nuclear-fusion technology. Will we know how to use it?

Why the Age of American Progress Ended — Invention alone can’t change the world; what matters is what happens next.

Your Creativity Won’t Save Your Job from AI — Robots were once considered capable only of unimaginative, routine work. Today they write articles and create award-winning art.

Unblocking Abundance, by Sarah Constantin — What can we do to remove the barriers to progress?

Choose Good Quests, by Trae Stephens and Markie Wagner — Silicon Valley's current focus on easy money has resulted in a failure to solve big problems. There is a moral imperative for our best players to choose good, hard quests, which will make the future better than the world today.