Roundup #6: Design & the future of generative AI

The Grand Unifying Theory of Design; my rant to AI skeptics; AI design gets innovative; LLM agents are the future; Starship & the next 10 years of space

Hey everyone!

Time for a new roundup. Some housekeeping first:

I’ve now consolidated futureblind.com, my blog archive, and all past newsletter subscriptions onto Substack. So if you’re seeing this newsletter for the first time, glad to have you!

It was a pain dealing with posts in multiple locations, and the previous FutureBlind host charged too much for such a basic set of features and no footnote capability (sooo annoying). Substack has been convenient and easy to work with over the past 3 years, and it continues to deploy more writer-friendly features. As of 4 months ago, FutureBlind has a paid tier, but — spoiler alert — there’s nothing special for paid members yet. I may end up putting only unpolished, brain-dump-style thoughts on the paid edition. Pay to remove my media filter. Oh and they also have Twitter Notes now — check it out!

Onto the show.

In this roundup:

🎨 Essay: The Grand Unifying Theory of Design — Design isn’t just about how something looks. It’s a universal problem-solving process that can be applied to any discipline to create valuable and delightful experiences.

🤖 A.I. Frontier

Essay: Addressing any remaining LLM skepticism — Is there anyone still skeptical of what existing LLMs will be capable of?

New interfaces of generative AI — What comes after chatbots? How else can we collaborate with AI?

How will generative AI help us tell better stories? — Cheaper AND better quality from stories to post-production.

Agents are the future — Are goal-seeking LLM agents the future of productivity?

🚀 Space — Go time for Starship; Eric Berger summarizes the next 10 years in space.

🔗 Interesting Links — Glotz builds Starbucks; Is tradition true?; Availability cascades; How ChatGPT works; Making computers that can smell; and more.

🎨 The Grand Unifying Theory of Design

Design is creating something that effectively solves a problem in a specific situation.

That’s not how most think of design though. People generally associate it with visual aesthetics or how something looks. For some there’s an aura of mysticism around it, as if it's an elusive art form that only a few can master. This narrow view of design misses how universal the core principles are.

There are a lot of design sub-disciplines. But if you think about big-picture “Design”, it’s really about making something that solves a problem well. In this way, design encompasses basically anything you do that provides value to others. A well-designed experience solves a problem and makes people feel delighted in the process.

Everything you do can benefit from understanding the fundamentals of design.

Continue reading:

🤖 A.I. Frontier

“When I think about generative design, I think about an exploration toward an optimal solution in context. Meaning that the thing that automation never captures is a value system — no software is ever gonna fully capture what all the people involved in that project want.” Anthony Hauck (founder of Hypar) in an interview with Brian Potter

A few weeks ago I wrote a short essay addressed to anyone who may still be skeptical of what existing LLMs will be capable of. Check it out:

New user interfaces of generative AI

Chat and prompting interfaces will clearly be a common way to interact with generative AI models going forward. But what about other ways to collaborate with these models?

I suspect everyone is defaulting to chat UIs because it’s the easiest and most obvious thing to make. Like command line interfaces (C:>) before graphical user interfaces. Or when motion picture cameras were invented and, lacking the proper grammar, they just filmed stage plays.

We’re still in the nascent days, but I’m starting to see some good experimentation:

The recently released beta of Adobe Firefly starts to get at some of these other ways to collaborate, but it looks like it still relies on text prompting for a lot of manipulation.

GitHub Copilot Code Brushes — Apply “brushes” (really just language model transformations) to your code to do things like make it more readable, fix bugs, etc. GitHub Copilot itself was an innovative interaction UI, only appearing in context as you write code. In my experience, though, it could do a better job of letting users explore the possibility space.

Many use cases of generative AI models are essentially just tools to help explore high-dimensional possibility space. (I hope to have an essay in the near future about this.) Linus Lee has been one of the best writers about this area:

Large language models represent a fundamentally new capability computers have: computers can now understand natural language at a human-or-better level. The cost to do this will get cheaper over time, and the speed and scale at which we can do it will go up quickly. When we imagine software interfaces to harness this language capability for building tools and games, we should ask not “what can we do with a program that completes my sentences?” but “what should a computer that understands language do for us?”

Here he is on AI-augmented creation:

I was talking earlier today about how you can view a creation process not as additive (starting with a sentence, and then adding another and another) but as iterated refinement and filtering through an infinite option space (there are an infinite continuations of your first sentence. How do you choose the right continuation?) Because LLMs can explicitly compute all possible continuations, this is an interesting way to look at writing with an AI, and AI-augmented creation in general.

Linus now works for Notion, which has already done a lot of interesting experimentation with language UIs, and I’m excited to see what they come up with next.

Sudowrite is working on innovative writing interfaces, particularly for storytelling and fiction.

Geoffrey Litt wrote a long and fascinating essay speculating on how LLM interfaces will progress. Highly recommended if you have any interest in the future of product design.

As Alan Kay wrote in 1984: “We now want to edit our tools as we have previously edited our documents.” [. . .] I think it’s important to notice that chatbots are frustrating for two distinct reasons. First, it’s annoying when the chatbot is narrow in its capabilities (looking at you Siri) and can’t do the thing you want it to do. But more fundamentally than that, chat is an essentially limited interaction mode, regardless of the quality of the bot. [. . .] When we use a good tool—a hammer, a paintbrush, a pair of skis, or a car steering wheel—we become one with the tool in a subconscious way. We can enter a flow state, apply muscle memory, achieve fine control, and maybe even produce creative or artistic output. Chat will never feel like driving a car, no matter how good the bot is.

Agents are the future

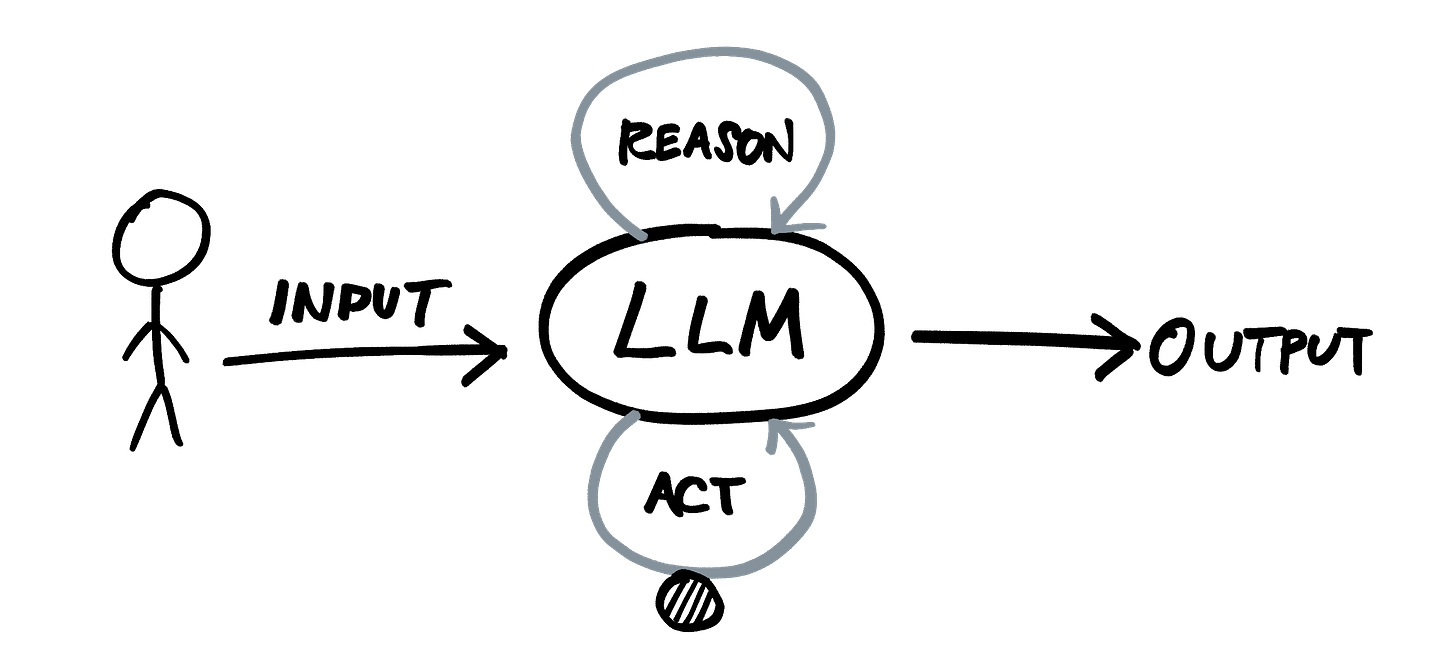

As people rapidly experiment with what language models are capable of, new patterns and abstractions emerge that enable more sophisticated and versatile applications. Agents are one of these patterns.

An agent carries out a specific purpose using reasoning from a language model and whatever tools it’s been given to do the job.

“Programmers will command armies of software agents to build increasingly complex software in insane record times. Non-programmers will also be able to use these agents to get software tasks done. Everyone in the world will be at least John Carmack-level software capable.” — Amjad Masad on Twitter

The ReAct pattern proposed in this paper last year is the most basic example: to answer the original prompt, the model Reasons through chain-of-thought and is given tools it can use to take whatever Actions are necessary.
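To make the Reason/Act loop concrete, here is a minimal sketch in Python. It is my own illustration, not code from the paper: `scripted_model` is a hypothetical stand-in for a real LLM call, `calculator` is a toy tool, and the `Action: tool[input]` text format is an assumption I picked to keep parsing simple.

```python
import re
from typing import Callable, Dict

# Assumed text format for the model's output (illustrative, not a standard).
ACTION_PATTERN = re.compile(r"Action: (\w+)\[(.*)\]")
FINAL_PATTERN = re.compile(r"Final Answer: (.*)")


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM: reasons, then either acts or answers."""
    observations = re.findall(r"Observation: (.+)", transcript)
    if not observations:
        return "Thought: I need to compute the product.\nAction: calculator[6 * 7]"
    return f"Thought: The tool gave me the result.\nFinal Answer: {observations[-1]}"


def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a op b' for integer a and b."""
    left, op, right = expression.split()
    a, b = int(left), int(right)
    results = {"+": a + b, "-": a - b, "*": a * b}
    return str(results[op])


def react(question: str,
          model: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    """Alternate between model reasoning and tool calls until an answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += "\n" + reply
        final = FINAL_PATTERN.search(reply)
        if final:
            return final.group(1).strip()
        action = ACTION_PATTERN.search(reply)
        if action is None:
            break  # The model neither acted nor answered, so stop.
        tool_name, tool_input = action.groups()
        # Feed the tool's result back so the next reasoning step can use it.
        transcript += f"\nObservation: {tools[tool_name](tool_input)}"
    return "No answer found"


answer = react("What is 6 times 7?", scripted_model, {"calculator": calculator})
```

Swapping `scripted_model` for a real model call is the only change needed to turn this into a working (if fragile) agent; the loop itself stays the same.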

An agent is analogous to a function in programming, but unlike a function its outcome isn’t deterministic. It has goal-seeking behavior and is essentially searching a landscape of possibilities, the terrain of which is defined by the prompt input.

When you combine multiple agents with other capabilities like shared memory management, you can create some very interesting patterns, a few of which have been heavily buzzed about over the past few weeks:

Auto-GPT — An autonomous agent that recursively creates tasks and seeks out a goal. Here it is solving a coding problem. Someone put up a basic UI around it here.

BabyAGI (Task-Driven Autonomous Agent) — A simple task management system that breaks a goal down into tasks, working on them one by one.
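The task-driven idea behind both projects can be sketched as a simple queue loop. This is not the actual Auto-GPT or BabyAGI code, just my rough illustration of the pattern: `plan` and `execute` are hypothetical stubs standing in for LLM calls, and `max_tasks` is a safety cap I added so the loop can't run forever.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


def plan(goal: str) -> List[str]:
    """Hypothetical planner stub; a real one would ask a model to split the goal."""
    return [f"research: {goal}", f"draft: {goal}", f"review: {goal}"]


def execute(task: str) -> Tuple[str, List[str]]:
    """Hypothetical executor stub; returns a result plus any follow-up tasks."""
    follow_ups = []
    if task.startswith("draft"):
        # Finishing a draft creates a new task, as a model might suggest.
        follow_ups.append(task.replace("draft", "polish", 1))
    return f"done({task})", follow_ups


def run_tasks(goal: str,
              planner: Callable[[str], List[str]],
              executor: Callable[[str], Tuple[str, List[str]]],
              max_tasks: int = 10) -> List[str]:
    """Break a goal into tasks and work through them one by one."""
    queue: Deque[str] = deque(planner(goal))
    results: List[str] = []
    while queue and len(results) < max_tasks:
        task = queue.popleft()
        result, new_tasks = executor(task)
        results.append(result)
        queue.extend(new_tasks)  # Newly created tasks go to the back of the queue.
    return results


results = run_tasks("write a blog post", plan, execute)
```

The interesting behavior comes from the executor being allowed to add tasks, which is also why a cap like `max_tasks` matters in practice.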

The agent pattern is multi-scale, meaning you can have an agent that uses other agents, and so on. An agent can be very generalized like Auto-GPT, which tries to solve whatever problem you give it using other agents in the process. Or it could be more specialized, like a data agent that does nothing but query a database until it finds an answer.
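Here is one way to picture the multi-scale idea in code, as a hedged sketch. I'm assuming an agent is just a callable that takes a goal and returns an answer or `None`. `make_data_agent`, `make_generalist`, and the dictionary standing in for a database are all hypothetical names for illustration; a real generalist would let a model choose which sub-agent to call.

```python
from typing import Callable, Dict, Optional

# Assumption for this sketch: an agent maps a goal to an answer, or None.
Agent = Callable[[str], Optional[str]]


def make_data_agent(table: Dict[str, str]) -> Agent:
    """Specialized agent: keeps querying the table until something matches."""
    def data_agent(question: str) -> Optional[str]:
        words = question.lower().rstrip("?").split()
        # Try progressively shorter suffixes of the question as lookup keys.
        for start in range(len(words)):
            key = " ".join(words[start:])
            if key in table:
                return table[key]
        return None
    return data_agent


def make_generalist(sub_agents: Dict[str, Agent]) -> Agent:
    """General agent: delegates the goal to sub-agents until one answers."""
    def generalist(goal: str) -> Optional[str]:
        for name, agent in sub_agents.items():
            answer = agent(goal)
            if answer is not None:
                return f"{name}: {answer}"
        return None
    return generalist


data = make_data_agent({"launch date": "April 17"})
team = make_generalist({"data": data})
# Because a generalist is itself an Agent, it can be nested inside another one.
org = make_generalist({"team": team})
nested_answer = org("What is the launch date?")
```

Since both levels share the same signature, you can keep stacking them, which is really all "multi-scale" means here.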

“1 GPT call is a bit like 1 thought. Stringing them together in loops creates agents that can perceive, think, and act, their goals defined in English in prompts. For feedback / learning, one path is to have a ‘reflect’ phase that evaluates outcomes, saves rollouts to memory, loads them to prompts to few-shot on them. That is the "meta-learning" few-shot path. You can "learn" on whatever you manage to cram into the context window.” — Andrej Karpathy
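The "reflect" loop in that quote can be sketched roughly like this. It is my own reading of the idea, not anyone's actual implementation: `stub_model` and `stub_evaluate` are hypothetical stand-ins for a model call and an outcome check, and the prompt layout is an assumption.

```python
from typing import Callable, List, Optional, Tuple

Rollout = Tuple[str, str, str]  # (task, attempt, reflection)


def build_prompt(task: str, memory: List[Rollout], window: int = 3) -> str:
    """Load the most recent rollouts into the prompt as few-shot examples."""
    shots = [f"Task: {t}\nAttempt: {a}\nReflection: {r}"
             for t, a, r in memory[-window:]]
    return "\n\n".join(shots + [f"Task: {task}\nAttempt:"])


def stub_model(prompt: str) -> str:
    """Hypothetical model: only gets it right once the prompt carries the hint."""
    return "HELLO" if "use uppercase" in prompt else "hello"


def stub_evaluate(task: str, attempt: str) -> Tuple[bool, str]:
    """Hypothetical reflect phase: judge the outcome and write a note about it."""
    if attempt.isupper():
        return True, "worked"
    return False, "failed: use uppercase"


def run_with_reflection(task: str,
                        model: Callable[[str], str],
                        evaluate: Callable[[str, str], Tuple[bool, str]],
                        memory: List[Rollout],
                        max_tries: int = 3) -> Optional[str]:
    """Attempt, reflect, save the rollout to memory, then retry with it in context."""
    for _ in range(max_tries):
        attempt = model(build_prompt(task, memory))
        ok, reflection = evaluate(task, attempt)
        memory.append((task, attempt, reflection))
        if ok:
            return attempt
    return None


memory: List[Rollout] = []
result = run_with_reflection("shout hello", stub_model, stub_evaluate, memory)
```

Nothing about the model changes between tries. All the "learning" lives in `memory`, and the `window` parameter is the stand-in for how much of it you can cram into the context window.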

I’ll leave it there for now — but this is a super interesting area to follow. I’ve been experimenting with this myself, both for personal use and on internal company projects, and I’ll likely write more about it later.

How will generative AI help us tell better stories?

“If you can generate an original screenplay with vivid details, characters and lines using ChatGPT (you can today), anyone’s voice using just 15 seconds of recording (available today), and can generate original video using text-to-video prompts (within months we’ll see models with indistinguishable video generation capabilities), you can generate a spin-off or sequel on your own to any mainstream TV show or movie.” — Scott Belsky, CPO of Adobe

The ability to create quality media at a low cost will continue to get better. Generative AI can be used at every level of the media-creation stack — from story generation to final touches in post-production. This is another area I’m closely following and am very excited about.

The potential is nearly endless. What company will be the Industrial Light & Magic of this new era?

If you’re as interested as I am, check out the following:

Runway.ml — A creative tool suite with generative AI at its core.

More innovation from Corridor Crew: 📹 Did We Just Change Animation Forever?

“Idea Olympics” by Packy McCormick — Packy wrote this sci-fi story with the help of ChatGPT and Midjourney. The model was a true collaborator, riffing off of ideas, writing words, and providing inspiration.

🚀 Space

Go time for Starship! You’re not going to want to miss this launch…

SpaceX has completed the final “flight readiness review” for the Starship launch system, with a targeted test flight set for April 17, pending regulatory approval. Soon they’ll perform a launch rehearsal to increase confidence in the fueling process.

During the actual test, the Super Heavy rocket will separate from the upper stage and make a controlled descent into the Gulf of Mexico, while the Starship upper stage aims to reach orbital velocity before reentering the atmosphere over the Pacific Ocean and landing vertically north of Kauai. The test flight’s primary goal is to assess the rockets, their engines, and the system’s capability to reenter Earth’s atmosphere and make a controlled landing.

Let’s hope the FAA gives them clearance so we can see a launch in the next few weeks.

👉 Follow Everyday Astronaut’s Starship orbital checklist to keep up

Why is Starship such a big deal? See my post “The Future of Space: Part I”

The next 10 years in space

From the launch of the James Webb Space Telescope to the rise of SpaceX, the past ten years have marked a period of dramatic change and growth in the space industry. With the continual development of new technology and exploration initiatives, the industry has seen incredible advances, yet also plenty of controversy, cost overruns, and delays. As we move further into the 2020s, the industry is at a pivotal point, with the potential to make major breakthroughs in exploration, transportation, and more.

Back in January, Eric Berger wrote about what he’ll be watching for over the next 10 years:

Commercial space stations — Focus turns to finding a future destination for NASA astronauts in low-Earth orbit as the International Space Station nears the end of its lifespan. NASA has awarded funding to various companies, including Axiom Space, Blue Origin, Nanoracks, and Northrop Grumman, for the development of commercial space stations, with the hope of avoiding a gap in low-Earth orbit presence.

When the stations become operational, the space agency will likely be willing to spend about $1 billion procuring commercial station services annually. But will any private stations be ready to go when NASA is ready to buy? This great space station race is one to watch during the next decade.

NASA’s Artemis program — The Artemis I mission successfully demonstrated NASA’s potential for future lunar missions, but the program faces technical and financial challenges. As NASA progresses with Artemis II and III, it must navigate setbacks and competition from China’s ambitious space program.

This is a huge financial, management, and technical challenge, and quite frankly, it’s not 100 percent clear that the space agency and its contractors are up to the task. [. . .] Watching how all of this plays out—how NASA handles delays, setbacks, and international competition—will be one of the compelling storylines of this decade.

Decluttering low-Earth orbit — The number of satellites launched annually has significantly increased, raising concerns about space junk and orbital sustainability. Addressing this complex issue requires careful legislation and cooperation among space powers to prevent potential tragedies in low-Earth orbit.

Is commercial space sustainable? Post-SpaceX, the commercial space industry faces financial and technical challenges, raising concerns for its viability. Companies like Blue Origin and Axiom Space must secure funding and overcome technical hurdles to achieve profitability and meet their goals.

Will Starship actually work? SpaceX’s ambitious Starship program aims to revolutionize spaceflight with reusable rockets and the ability to carry larger payloads at a lower cost. While many challenges lie ahead, a successful Starship would disrupt the global launch industry and significantly alter the future of space exploration and commerce.

🔗 Interesting Links

From the archives ~ When transferring FutureBlind to Substack, I found a few still-relevant posts you might find interesting:

Glotz Builds Starbucks (2012) — A riff on Charlie Munger’s essay on Glotz building Coca-Cola. It’s 1980: You’ve just inherited Starbucks, a small Seattle business that roasts and sells coffee beans in 4 locations. How would you build it into a company worth more than $30 billion in 30 years?

Is the Internet Ruining Media? Hardly. (2008) — I guess not much has changed in 15 years when it comes to our fears of technology replacing what we love.

Quality Without Compromise (2007) — See’s Candies, Warren Buffett, and the perfect investment.

Other great content I’ve enjoyed over the past 3 months:

Erik Torenberg: “Tradition is Truer than Truth” — Just as in biological evolution, traditions are selected for over time as they compete for survival. But are they true? Can they be proven? Sometimes it might not matter. If a tradition or rule-of-thumb has been around for a long time, it’s probably useful — even if we don’t know the use.

Morgan Housel: “How It All Works (A Few Short Stories)” — A few short stories with good lessons: Everyone has different tastes, but emotions are universal; People love familiarity; Everything is for sale; Consistency beats intelligence; and more.

Marc Andreessen: “On Availability Cascades” — Availability cascades occur when a social cascade is triggered by an available topic, regardless of its importance, leading to widespread public discourse. They are driven by availability entrepreneurs, who seek to advance their own agendas by triggering cascades around specific problems or topics.

Wired: “This Startup is Using AI to Unearth New Smells” — On Osmo, a new startup with a vision of allowing computers to smell. This is much much harder to do than computer vision, but once you give computers the power to smell it could be even more consequential than allowing them to see.

Gary Sheng: “Meme Lords Deserve More Respect” and “The Case for Honest, Participatory Propaganda” — Honest propaganda conveys truth or sincere opinions without deception, while participatory propaganda aims to build grassroots movements and empowers individuals to contribute. By utilizing honest, participatory propaganda, people can effectively share their ideas and compete with larger entities in the battle for attention.

Stephen Wolfram: “What Is ChatGPT Doing… and Why Does It Work?” — A very long and detailed essay — but still easy to understand — on what the models behind ChatGPT are doing behind the scenes.

Huge photo archive of buildings under construction (h/t Construction Physics)

