This is going to be something a little bit different: a podcast roundup.

For the past few years I’ve been doing a roundup post and essay about once a quarter. This will be an audio version of that. I wanted to try a podcast format where it’s primarily clips from others with me narrating along the way.

Why? It sounded like fun and I love experimenting with how to make content on different mediums. I think it turned out pretty well, although much longer than I initially thought.

Going through all these audio files was a pain, so I wrote a script that transcribes them all and lets me search by topic and group related content. This was kinda fun to make as well.

Most of this episode is on AI, similar to my last few roundups, but I also did a section on the future of education, and I’ve got some carveouts for other interesting content at the end as well.

The podcast is almost an hour long, but you should be able to skip to relevant sections using the timestamps in the description. And if you’re not an audio person, there’s the usual written version below.

01:37 — 🤖 The A.I. Frontier

08:45 — AI: How useful are language models?

14:05 — AI: What does AI allow us to do?

14:33 — AI: Easier to communicate

18:16 — AI: Creativity

25:50 — AI: Augmented Intelligence

33:29 — 🧑🏫 The Education Frontier

43:00 — 🔗 Interesting Content

🤖 A.I. Frontier

In this section about AI, I’m going to first cover some fundamentals of how these AI models work, and then really get into their use cases and what they allow us to do.

It’s been 3 years since the original GPT-3 release, 6 months since ChatGPT, and we’ve had GPT-4 and a wave of open source models for more than 3 months now.

So with that said, I want to talk about the current state of GenAI, in particular language models because these have more generalized promise.

Here’s Andrej Karpathy in a talk called “State of GPT” with more on what models are out there and what they can do:

Now since then we've seen an entire evolutionary tree of base models that everyone has trained. Not all of these models are available. For example, the GPT-4 base model was never released. The GPT-4 model that you might be interacting with over API is not a base model, it's an assistant model. And we're going to cover how to get those in a bit. GPT-3 base model is available via the API under the name DaVinci. And GPT-2 base model is available even as weights on our GitHub repo. But currently the best available base model probably is the LLaMA series from Meta, although it is not commercially licensed.

Now one thing to point out is base models are not assistants. They don't want to answer to you, they don't want to make answers to your questions. They just want to complete documents. So if you tell them, write a poem about the bread and cheese, it will answer questions with more questions. It's just completing what it thinks is a document. However, you can prompt them in a specific way for base models that is more likely to work.

A review of what we have now:

Monolithic foundation models from OpenAI, Google, Anthropic, etc.

Assistant models built on top of these like ChatGPT, Bard, and Claude.

Open source models — particularly the semi-open-sourced LLaMA model from Meta, where you can run it locally and even fine-tune it with your own data.

State-of-the-art GPT-4 is reportedly a “mixture of experts” assistant model that routes your prompt to whichever large model can complete it best, though OpenAI hasn’t confirmed this. If true, this is a big difference from the original GPT-3.

In the early days of GPT-3, if you “asked” it a question, it had a high chance of spitting out something rude or offensive, or of just not answering the question at all. How do they get from the base models to the assistant models like ChatGPT that are pleasant to talk to?

So instead, we have a different path to make actual GPT assistants, not just base model document completers. And so that takes us into supervised fine tuning. So in the supervised fine tuning stage, we are going to collect small but high-quality data sets. And in this case, we're going to ask human contractors to gather data of the form prompt and ideal response. And we're going to collect lots of these, typically tens of thousands or something like that. And then we're going to still do language modeling on this data. So nothing changed algorithmically. We're just swapping out a training set. So it used to be internet documents, which is a high-quantity, low-quality for basically QA prompt response kind of data. And that is low-quantity, high-quality. So we would still do language modeling. And then after training, we get an SFT model. And you can actually deploy these models. And they are actual assistants. And they work to some extent.

The performance of these models seems to have surprised even the engineers that created them. How and why do these models work?

Stephen Wolfram released a great essay about this earlier this year aptly called “What is ChatGPT Doing… and Why Does It Work?” The essay was basically a small book, and Wolfram actually did turn it into one. So it’s long, but if you’re interested in how these models work, I’d really recommend it, even if not everything makes sense. He explains models, neural nets, and a bunch of other building blocks. Here’s the gist of what ChatGPT does:

The basic concept of ChatGPT is at some level rather simple. Start from a huge sample of human-created text from the web, books, etc. Then train a neural net to generate text that’s “like this”. And in particular, make it able to start from a “prompt” and then continue with text that’s “like what it’s been trained with”.

As we’ve seen, the actual neural net in ChatGPT is made up of very simple elements—though billions of them. And the basic operation of the neural net is also very simple, consisting essentially of passing input derived from the text it’s generated so far “once through its elements” (without any loops, etc.) for every new word (or part of a word) that it generates.

. . . The specific engineering of ChatGPT has made it quite compelling. But ultimately (at least until it can use outside tools) ChatGPT is “merely” pulling out some “coherent thread of text” from the “statistics of conventional wisdom” that it’s accumulated. But it’s amazing how human-like the results are. And as I’ve discussed, this suggests something that’s at least scientifically very important: that human language (and the patterns of thinking behind it) are somehow simpler and more “law like” in their structure than we thought. ChatGPT has implicitly discovered it. But we can potentially explicitly expose it, with semantic grammar, computational language, etc.
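To make Wolfram's description a bit more concrete, here is a toy sketch of the "start from a prompt and continue with text like what it was trained on" loop. It is not how ChatGPT works internally: a simple table of which word follows which stands in for the neural net, and the names `train_bigram` and `generate` are made up for this illustration.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record which word follows which: a tiny stand-in for 'statistics of text'."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, prompt, max_new_words=8, seed=0):
    """Continue the prompt one word at a time, based only on the text so far."""
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_new_words):
        candidates = followers.get(output[-1])
        if not candidates:  # no known continuation, so stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the cat"))
```

Each new word is picked by a single lookup on what has been generated so far, which mirrors the "once through, no loops, for every new word" idea in the passage, just at a vastly smaller scale.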

In a YouTube lecture he put out, Wolfram explains that ChatGPT's ability to generate plausible text is due to its ability to use the known structures of language, specifically the regularity in grammatical syntax.

We know that sentences aren't random jumbles of words. Sentences are made up with nouns in particular places, verbs in particular places, and we can represent that by a parse tree in which we say, here's the whole sentence, there's a noun phrase, a verb phrase, another noun phrase, these are broken down in certain ways. This is the parse tree, and in order for this to be a grammatically correct sentence, there are only certain possible forms of parse tree that correspond to a grammatically correct sentence. So this is a regularity of language that we've known for a couple of thousand years.

How useful are language models?

How useful really are language models, and what are they good and bad at?

I have occasionally used it to summarize super long emails, but I've never used it to write one. I actually summarize documents is something I use it for a lot. It's super good at that. I use it for translation. I use it to learn things.

That was Sam Altman, co-founder and CEO of OpenAI, saying he primarily uses it for summarizing. At another event he admitted that ChatGPT plug-ins haven’t really caught on yet, despite their potential.

What are they good for then? What are the LLM “primitives”— or the base capabilities that they’re actually good at?

The major primitives are summarization, text expansion, basic reasoning, and semantic search in meaning space. Most of all, there’s translation: between human languages, computer languages, and any combination of them. I’ll talk about this in a little bit.

They’re clearly not good at retrieving information or being a store of data, in the same way that human brains aren’t naturally good at this without heavy training. This might never really be a core primitive of generalized LLMs. They are not a database and likely will never be. And that’s ok: they can always be supplemented with vector or structured data sources.

As I mentioned, the context window of a transformer is its working memory. If you can load the working memory with any information that is relevant to the task, the model will work extremely well, because it can immediately access all that memory.

Andrej Karpathy talks here about what LLMs aren’t good at, but also how they can easily be supplemented:

And the emerging recipe there is you take relevant documents, you split them up into chunks, you embed all of them, and you basically get embedding vectors that represent that data. You store that in the vector store, and then at test time, you make some kind of a query to your vector store, and you fetch chunks that might be relevant to your task, and you stuff them into the prompt, and then you generate. So this can work quite well in practice.

So this is I think similar to when you and I solve problems. You can do everything from your memory and transformers have very large and extensive memory, but also it really helps to reference some primary documents. So whenever you find yourself going back to a textbook to find something or whenever you find yourself going back to documentation of a library to look something up, the transformers definitely want to do that too. You have some memory over how some documentation of a library works, but it's much better to look it up. So the same applies here.

I can attest to this working very well, and nearly eliminating any hallucinations.
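The recipe Karpathy describes (chunk, embed, store, query, stuff into the prompt) can be sketched in a few lines. This is a minimal illustration under loud assumptions: the "embedding" is just a word count rather than a real embedding model, the "vector store" is a plain list, and every function name here is hypothetical. No language model is actually called; the sketch stops at building the prompt.

```python
import math
import re
from collections import Counter

def chunk(text, size=12):
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_store(documents):
    """The 'vector store' here is just a list of (chunk, vector) pairs."""
    return [(c, embed(c)) for doc in documents for c in chunk(doc)]

def retrieve(store, query, k=2):
    """Fetch the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(store, question, k=2):
    """Stuff the retrieved chunks into the prompt ahead of the question."""
    context = "\n".join(f"- {c}" for c in retrieve(store, question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

docs = [
    "Primer helps teachers launch micro-schools with ten to twenty-five students each.",
    "Khanmigo is a Khan Academy tutor that integrates GPT-4 for students.",
]
store = build_store(docs)
print(build_prompt(store, "What is Khanmigo?", k=1))
```

The resulting prompt is what you would hand to the model, so its "working memory" contains the relevant passage instead of relying on whatever it memorized in training.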

Advanced reasoning also isn’t there yet, but it may be in the near future. New prompting and training techniques are currently being explored.

I think more generally, a lot of these techniques fall into the bucket of what I would say, recreating our system 2. So you might be familiar with the system 1, system 2 thinking for humans. System 1 is a fast, automatic process. And I think kind of corresponds to like an LLM, just sampling tokens. And system 2 is the slower, deliberate planning sort of part of your brain. And so this is a paper actually from just last week, because this space is pretty quickly evolving. It's called “Tree of Thought”. And in “Tree of Thought”, the authors of this paper propose maintaining multiple completions for any given prompt. And then they are also scoring them along the way and keeping the ones that are going well, if that makes sense. And so a lot of people are really playing around with kind of prompt engineering to basically bring back some of these abilities that we sort of have in our brain for LLMs.

Karpathy mentions “Tree of Thought” as one solution to LLM problem solving. This is one of a bunch of different exploratory efforts going on to hugely improve results without even retraining the base models.
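The "maintain multiple completions, score them, and keep the ones going well" idea can be illustrated with a small search loop. This is not the paper's algorithm, only a simplified sketch of the same shape: `propose` and `score` are hypothetical stand-ins for calls to a language model, and the toy task (pick numbers that add up to a target) is made up for the example.

```python
def propose(partial):
    """Stand-in for asking a model for possible next steps."""
    return [partial + [n] for n in (1, 3, 5, 7)]

def score(partial, target):
    """Stand-in for a model rating a partial solution: closer to the target is better."""
    total = sum(partial)
    return -abs(target - total) if total <= target else float("-inf")

def tree_search(target, keep=3, max_depth=6):
    frontier = [[]]  # start with one empty line of thought
    for _ in range(max_depth):
        # Expand every surviving branch, then rank all the new candidates.
        candidates = [c for p in frontier for c in propose(p)]
        candidates.sort(key=lambda c: score(c, target), reverse=True)
        frontier = candidates[:keep]  # keep only the branches that are going well
        if sum(frontier[0]) == target:
            return frontier[0]
    return None

print(tree_search(16))
```

The contrast with plain sampling is that a single unlucky early step does not doom the answer, because several partial attempts are kept alive and compared at every stage.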

OpenAI also recently said they trained a model via process supervision rather than outcome supervision. What this means is that, when training, instead of rewarding the model only for getting the “right” final answer, they reward it for each correct step of its “chain-of-thought” reasoning. It seems to me that models like this will really allow future GPT versions to do more advanced reasoning.

And if you pay for ChatGPT Plus, as of last week you now have access to Code Interpreter. Some are describing it as GPT-4.5 because of its ability to write and troubleshoot code to solve problems. (It’s seriously good, so try it if you can!)

What does AI allow us to do?

Abilities fall into 3 major categories for me:

They make us smarter.

They make us more creative.

They make it much easier to communicate with computers and other people.

3. Easier to communicate

Language models act as a translation or mapping layer between humans and machines. So if I describe something in English to an LLM, it could translate it into Mandarin so someone in China could understand it, into Python so it can be executed as code, or into simpler English so a 5-year-old gets it.

Part of this “mapping” is routing tasks to the right tools, where we can then use them with finer UI controls. In a recent New Yorker article, Jaron Lanier wrote about AI making computers less rigid:

Many of the uses of A.I. that I like rest on advantages we gain when computers get less rigid. Digital stuff as we have known it has a brittle quality that forces people to conform to it, rather than assess it. We’ve all endured the agony of watching some poor soul at a doctor’s office struggle to do the expected thing on a front-desk screen. The face contorts; humanity is undermined. The need to conform to digital designs has created an ambient expectation of human subservience. A positive spin on A.I. is that it might spell the end of this torture, if we use it well. We can now imagine a Web site that reformulates itself on the fly for someone who is color-blind, say, or a site that tailors itself to someone’s particular cognitive abilities and styles. A humanist like me wants people to have more control, rather than be overly influenced or guided by technology. Flexibility may give us back some agency.

Design researcher Jakob Nielsen, the business partner of Don Norman (of “Design of Everyday Things” fame), wrote a short post recently on how generative AI is introducing the third user-interface paradigm in computing history. Unlike the current paradigm of command-based interaction design, where users issue commands to the computer one at a time to produce a desired outcome, users now tell the computer directly what they want.

With the new AI systems, the user no longer tells the computer what to do. Rather, the user tells the computer what outcome they want. Thus, the third UI paradigm, represented by current generative AI, is intent-based outcome specification. . . . the user tells the computer the desired result but does not specify how this outcome should be accomplished. Compared to traditional command-based interaction, this paradigm completely reverses the locus of control.

But are we destined to only be interacting with these AI models through speaking or writing? Hopefully not, as I discussed in my last essay. Nielsen continues:

The current chat-based interaction style also suffers from requiring users to write out their problems as prose text. Based on recent literacy research, I deem it likely that half the population in rich countries is not articulate enough to get good results from one of the current AI bots. That said, the AI user interface represents a different paradigm of the interaction between humans and computers — a paradigm that holds much promise. . . . Future AI systems will likely have a hybrid user interface that combines elements of both intent-based and command-based interfaces while still retaining many GUI elements.

2. The Creativity Frontier

The second ability is that AI makes us more creative. It turns us into explorers of possibility space.

What do I mean by possibility space? You’ll also see it called meaning space or latent space, which for these purposes are interchangeable.

It can be a complicated topic to explain, especially without any visual aids. I’ll put a few links in the footnotes, and eventually I hope to write a post specifically about this. Here’s a passage from Stephen Wolfram’s “What is ChatGPT Doing?” essay:

One can think of an embedding as a way to try to represent the “essence” of something by an array of numbers—with the property that “nearby things” are represented by nearby numbers.

And so, for example, we can think of a word embedding as trying to lay out words in a kind of “meaning space” in which words that are somehow “nearby in meaning” appear nearby in the embedding.

. . . Roughly the idea is to look at large amounts of text (here 5 billion words from the web) and then see “how similar” the “environments” are in which different words appear. So, for example, “alligator” and “crocodile” will often appear almost interchangeably in otherwise similar sentences, and that means they’ll be placed nearby in the embedding. But “turnip” and “eagle” won’t tend to appear in otherwise similar sentences, so they’ll be placed far apart in the embedding.

Everyone is familiar with seeing data represented on a two-dimensional chart with X and Y axes. That’s all these “embeddings” are: coordinates in a space, but rather than 2 dimensions, they have hundreds or thousands. So we can’t really visualize them easily. Humans can’t see more than 3 dimensions, so we have to just take a slice of them in 2D or 3D (or otherwise reduce the dimensionality).

In this meaning space, as Wolfram said, similar things are closer together. It works the same way in the text and art models. Alligator and crocodile are close in word space. In image space, an apple and an orange are close together, just as the color red would be close to roses.
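Here is a tiny sketch of what "nearby in meaning space" means in practice. The vectors below are made up by hand purely for illustration (real embeddings are learned from text and are far longer), and `cosine_similarity` and `nearest` are just names chosen for this example.

```python
import math

# Hand-made 3-number "embeddings", invented for illustration only.
# Real embeddings are learned and have hundreds or thousands of dimensions.
embeddings = {
    "alligator": [0.9, 0.8, 0.1],
    "crocodile": [0.85, 0.82, 0.12],
    "turnip": [0.1, 0.2, 0.9],
    "eagle": [0.7, 0.1, 0.2],
}

def cosine_similarity(a, b):
    """Close to 1.0 when two vectors point in nearly the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def nearest(word):
    """Return the other word whose vector is most similar to this one."""
    others = (w for w in embeddings if w != word)
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))

print(nearest("alligator"))
print(cosine_similarity(embeddings["turnip"], embeddings["eagle"]))
```

With these invented numbers, "alligator" lands next to "crocodile" while "turnip" and "eagle" score much lower against each other, which is the same pattern Wolfram describes for real word embeddings.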

What ChatGPT and Midjourney are doing is allowing us to “navigate” this space by using text to explore it. Language models complete the text by finding higher probability combinations near your prompt. Art models associate your text prompt with nearby imagery in the space.

Daniel Gross put it similarly in an interview last year with Ben Thompson:

The models are just roaming through this embedded space they’ve created looking for things that are near each other in this multi-dimensional world. And sometimes the statistically most-next-likely thing isn’t the thing we as humans sort of agree on is truth.

He was referring to the negative side here of course, of hallucinations. But what’s a negative for discovering facts actually helps us be more creative.

One of my favorite authors, Kevin Kelly, has a new book out and has been making the podcast rounds recently. Here he is on The Tim Ferriss Show talking about how he uses Midjourney:

It’s like photography. I feel I have some of the same kind of a stance that I have and I’m photographing, I’m kind of hunting, searching through it, I’m trying to find a good position, a good area where there’s kind of promise. And I’m moving around and I’m trying, and I’m whispering to the AI, how about this? I’m changing the word order. I’m actually interacting, having a conversation with it over time. And it might take a half hour or more to get an image that I am happy with. And I’m at that point, very comfortable in putting my name as a co-creator of it because I have, me and the intern have worked together to make this thing.

Exploring possibility space is exactly what Kevin is doing. The Midjourney model is generating the image for him, but mostly it’s helping him explore that space until he finds something he likes.

Here he is in another interview on Liberty’s Highlights talking about how these models make us more creative and add leverage to our capabilities:

Yeah, the way you think of the kind of the prompters, which are the new artists, is that they’re like directors of a film. They’re commanding a whole bunch of different entities and agencies that do the craft. And their craft is orchestrating creative ideas and they have crafts people who do the cinematography and do the music, the score and everything. And they're kind of curating or directing the thing, or a producer in music. So we now have that kind of on images. And the important thing is that enables many more people to participate in creativity. And I say we have synthetic creativity. These engines are absolutely 100% lowercase creative. They are creative. There’s no getting around it. We can’t deny it. But it's a lowercase, small “c”, creativity. It's not the uppercase creativity that we have, like with a Guernica painting or Picasso or something. So the idea is that those we can't synthesize yet, maybe someday, but not right now. And so that lowercase creativity is synthesized and it's available to people. And that’s a huge innovation.

So this exploration of possibility space is a key feature of generative AI models.

At the same time, it’s pretty clear to me that we need a better way to navigate this space. Right now we’re doing it very haphazardly using trial and error with text prompting. But this doesn’t have to be the case.

Just like a geneticist discovering which genes code for certain traits (maybe it’s a few genes for eye color, or a lot for personality traits), we can discover which neurons and dimensions represent certain areas of meaning space.

This is why further research on how LLMs work is so useful.

If we can tweak parameters that represent attributes like humor, verbosity, complexity of writing… then we can manually adjust them like on a dashboard. You could increase the humor of your writing by 10% and decrease the length by 5%. Same goes for art models. How cool would it be to have slider adjustments for realism, color, detail, mood, or whatever?

I could keep going, but I’ll save it for another time.

1. AI = “Augmented Intelligence”

Rather than Artificial Intelligence, it’s better to think of AI as “Augmented Intelligence”. More than anything, it acts as a multiplier of our own intelligence — just like computers did decades ago, but much more so.

This is Marc Andreessen on a recent episode of Lex Fridman’s podcast talking about the benefits that AI will bring:

Marc: And certainly at the collective level, we could talk about the collective effect of just having more intelligence in the world, which will have very big payoff. But there's also just at the individual level, like what if every person has a machine, and it's the concept of augment — Doug Engelbart's concept of augmentation. What if everybody has an assistant, and the assistant is 140 IQ, and you happen to be 110 IQ. And you've got something that basically is infinitely patient and knows everything about you, and is pulling for you in every possible way — wants you to be successful. And anytime you find anything confusing, or want to learn anything, or have trouble understanding something, or want to figure out what to do in a situation — right, when I figure out how to prepare for a job interview, like any of these things, like it will help you do it. And it will therefore — the combination will effectively be — because it will effectively raise your IQ will therefore raise the odds of successful life outcomes in all these areas.

Lex: So people below the hypothetical 140 IQ, it’ll pull them off towards 140 IQ.

Marc: And then of course, people at 140 IQ will be able to have a peer, right, to be able to communicate, which is great. And then people above 140 IQ will have an assistant that they can farm things out to.

Packy McCormick wrote a few good essays on this topic recently. In “Intelligence Superabundance” he addresses the concern that AI will steal our jobs and obsolete humans:

. . . the increased supply of intelligence will create more demand for tasks that require intelligence, that we’ll turn gains in intelligence efficiency into ways of doing new things that weren’t previously feasible.

. . . We can examine it a little more rigorously with a little mental flip. Instead of thinking about AI as something separate from human intelligence, we should think of it as an increase in the overall supply of intelligence. . . . Then the important question becomes not “Will I lose my job?” but “What would I do with superabundant intelligence?”

Essentially, demand for intelligence is very elastic, meaning that when either the price goes down or the supply goes up, the demand goes up a lot to match it. If AIs give us more intelligence, our demand and uses for it will increase.

Packy writes more in another essay called “Evolving Minds”:

In that case, I don’t think the framing of AI as simply a tool that we’ll use to do things for us is quite right. I think we’ll use what we learn building AI to better understand how our minds work, define the things that humans are uniquely capable of, and use AI as part of a basket of techniques we use to enhance our uniquely human abilities.

Even as AI gets smarter, it will fuel us to get smarter and more creative, too. Chess and Go provide useful case studies.

He goes on to write about how AI became much better than us at chess and Go, but instead of giving up, humans got better — and more creative — as well:

Some people will be happy letting AI do the grunt work. Not everyone will see AI’s advance as a challenge to meet, just as I have not taken advantage of chess and Go engines to become better at either of those games. But I think, as in chess and Go, more people will get smarter. The process will produce more geniuses, broadly defined, ready to deliver the fresh, human insights required to ignite the next intellectual revolution.

If we can walk the line, the result, I think, will be a deeper understanding of the universe and ourselves, and a meaningful next leg in the neverending quest to understand and shape our realities.

When we think about this augmented intelligence, it’s not necessarily just “ChatGPT” or some other generic AI. Future versions will be very much customized. Not only will they have your data, but they’ll share your system of values. This is Sam Altman on this topic from an interview early this year:

. . . the future I would like to see is where access to AI is super democratized, where there are several AGIs in the world that can kind of help allow for multiple viewpoints and not have any one get too powerful, and that the cost of intelligence and energy, because it gets commoditized, trends down and down and down, and the massive surplus there, access to the systems, eventually governance of the systems, benefits all of us.

. . . We now have these language models that can understand language, and so we can say, hey model here's what we'd like you to do, here are the values we'd like you to align to. And we don't have it working perfectly yet, but it works a little and it'll get better and better.

And the world can say all right, here are the rules, here's the very broad-bounds absolute rules of a system. But within that people should be allowed very different things that they want their AI to do. And so if you want the super never offend safe for work model you should get that. And if you want an edgier one that is sort of creative and exploratory but says some stuff you might not be comfortable with or some people might not be comfortable with, you should get that.

So this all sounds great, but if base-level intelligence is commoditized, how can humans stand out and differentiate? I think there are a lot of answers to this, some of them maybe even philosophical. I’ll leave those for another time, and just leave you with a clip from Liberty’s interview with Kevin Kelly:

Liberty: And in the book that would be compressed as don't be the best, be the only, this reminds me of — Naval has a way of saying it, which is “escape competition through authenticity”, right? If someone else is doing the exact same thing, well, maybe a robot can do it. Right?

Kevin: Exactly. That's another piece of advice in the book is that if your views on one matter can be predicted from your views on another matter, you should examine yourself because you may be in a grip of an ideologue. And what you actually want is you actually want to have a life and views that are not predictable by your previous ones. And the advantage there is that you are going to be less replaceable by an AI.

Liberty: Yeah. If everybody's thinking the same, a lot of people are not thinking in there.

Kevin: Well, but the AIs are auto-complete. They're just kind of auto-completing. And if you're easy to auto-complete, you're easy to be replaced.

Liberty: That's a good way to put it. Optimize for being hard to auto-complete.

🧑🏫 Education Frontier

Education, specifically K-12 education, has a lot of room for improvement. I wrote a few things about the future of education many years ago, but haven’t really talked about it much since. With a 2-year-old now, I follow it a little more closely, so I want to share some interesting content I’ve seen recently.

Ryan Delk founded a startup called Primer that’s working to build a new education system in the US by helping teachers launch their own micro-schools with 10-25 students that provide more personalized education without all the bureaucracy that comes with big school systems.

He was interviewed recently on a podcast called The Deep End, and here he is giving some more detail on what they do:

So the micro schools today that we have are, they're all led by one teacher as sort of the main micro school leader. Depending on the number of kids, this is part of what's really cool about it is not only can teachers make way more money than they do in the traditional system. So on average, our teachers probably make 40% to 50% more than what they'd make in the existing districts that they teach in. But they also can get an aide to help them out in their class.

And so at a certain threshold, they unlock an aide that will help them and can also sort of assist with different subjects and stuff. So you have one micro-school leader. For elementary, as you mentioned, it's very standard in the US that an elementary teacher teaches all subjects. And at some middle schools or a lot of middle schools, sometimes that's also true, where one teacher's teaching subjects. And so we use that model.

Right now, most of our kids are in elementary, third through fifth, third through sixth grade. And so that's the current model. And then how we augment that is with online, what we call them as subject matter experts. And so we have, for example, a really exceptional ELA and a really exceptional reading teacher, really exceptional math teachers. And so for specific subjects where kids, whether it's that they need remedial help, or they're advanced and they need more support, and the micro school leader can't support them directly or just isn't set up to support them directly because of the structure of the class or whatever, kids have these resources.

This brings us to the question of — what is school actually providing to students?

I think a lot of people that are in the education sort of startup space have this sort of like, oh, we're going to take over the world and disrupt everything. And, you know, we need to blow up the whole existing system. My view on it is maybe a bit more nuanced than that. And I think that the public school system in the U.S. right now, I think it serves like three very distinct functions. And we've bundled all that into one name, which is like public schools.

Everyone thinks about the academic component. Hey, we're sort of this is the engine that is responsible for the academic development of the children of the U.S. And that's certainly one component of it. And we could debate how effective it is at that. But another component that's sort of the two other components I think are maybe equally important societally, but are very under discussed.

One is that for millions of kids, it's actually basically the way that they're able to get food and have food security each day. And we sort of totally dismiss this, but it's actually, I think, a very important and very sad and unfortunate sort of component of public schools. You can do the numbers and the research on this, and it will blow your mind.

And the second component, the third component is that, you know, most parents are working and they need a place for their kids to go from you know eight to, with aftercare, eight to five every day so they can work. And so there's this babysitting component of school that's very important.

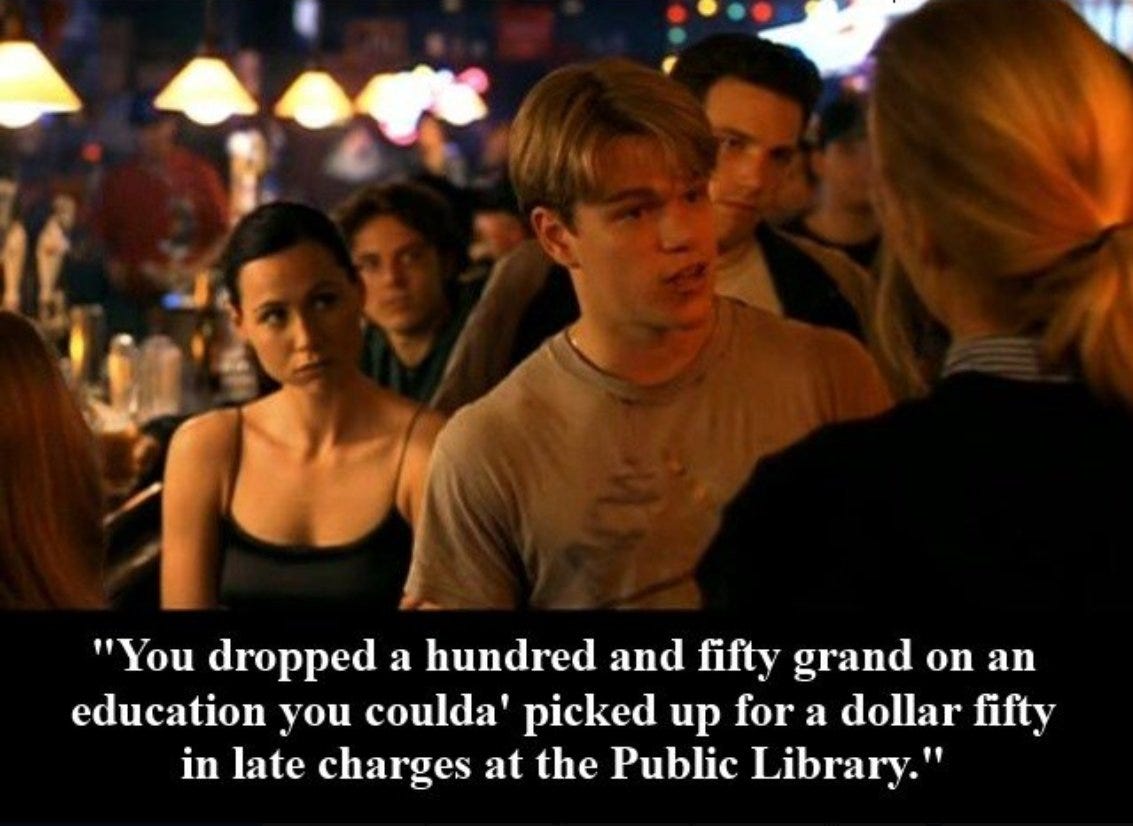

This reminds me of the famous Good Will Hunting scene (and meme):

As we all know, especially with the internet, you can learn everything they teach you at Harvard yourself. But obviously that’s not the real reason why people want to go to Harvard. Just like universities, all school is a bundle of services:

Learning

Daycare / babysitting: even if a computer could handle 100% of education, you wouldn’t leave a 7-year-old alone all day while you work.

Social connections

Tutoring

Working with others

And as Ryan mentioned, providing food.

So it’s pretty clear we’re going to have somewhat of an “unbundling” of these services over time. Into what, I don’t know. What I do know is that AI will play a bigger and bigger role going forward.

Sal Khan did a TED talk earlier this year where he talked about the 2 Sigma Problem, which comes from a 1984 study by Benjamin Bloom finding that students who received one-on-one tutoring performed about 2 standard deviations better than students in a conventional classroom. This takes an average student and turns them into an exceptional one. The problem, of course, is that one-on-one tutoring is very hard to scale.
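To put a rough number on what 2 standard deviations means, here is a minimal Python sketch. It assumes scores follow a normal distribution, which is a simplification, and the function name `percentile_after_shift` is purely illustrative and not taken from the study or the talk.

```python
from statistics import NormalDist

# Assumption: student outcomes are roughly normally distributed.
# Scores are expressed in standard deviations from the class average.
baseline = NormalDist(mu=0.0, sigma=1.0)


def percentile_after_shift(sigmas: float) -> float:
    """Percentile rank (0-100) of an average student who improves by `sigmas` standard deviations."""
    return 100 * baseline.cdf(sigmas)


print(f"No tutoring: {percentile_after_shift(0):.1f}th percentile")
print(f"+2 sigma:    {percentile_after_shift(2):.1f}th percentile")
```

Under that assumption, a student who starts at the 50th percentile ends up at roughly the 98th.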

Enter AI. Khan Academy has a new feature called Khanmigo that integrates GPT-4:

This is really going to be a super tutor. And it's not just exercises. It understands what you're watching. It understands the context of your video. It can answer the age-old question, why do I need to learn this? And it asks, socratically, well, what do you care about? And let's say the student says, I want to be a professional athlete. And it says, well, learning about the size of cells, which is what this video is about, is really useful for understanding nutrition and how your body works, et cetera. It can answer questions, it can quiz you, it can connect it to other ideas. You can now ask as many questions of a video as you could ever dream of.

So another big shortage out there, I remember the high school I went to, the student-to-guidance counselor ratio was about 200 or 300 to one. In a lot of the country, it's worse than that. We can use Khanmigo to give every student a guidance counselor, academic coach, career coach, life coach, which is exactly what you see right over here.

With this, students can get truly custom learning experiences. That’s pretty awesome to me because it frees up time in school for more hands-on activities and applications, things that are hard to replicate on a computer.

In an interview on Jim O’Shaughnessy’s podcast “Infinite Loops”, Ethan Mollick has a great bit about this:

So, I think in much of the world, we're going to see a real transformation of teaching, where teaching is not high quality right now and we're going to see a leap into another model.

But in the US, in Europe and probably in China, Japan, other places that already have fairly highly developed educational systems, I think the future looks like one where you are going to still go to school as you did before, during the same class period times, but you're going to be basically... The easiest version to think about, is you're going to be doing a much more engaging version of homework in school. Project-based learning, experiences, building stuff, trying to create things, trying to apply things. Outside of school you're going, your homework assignments are going to be monitored by AI and you'll be working with an AI tutor that will generate lessons for you and teach you concepts, and make sure you're on track and will let the teacher know "The person's struggling at this, despite this," and will help the teacher set up teams so that you don't have those horrible team assignments and track grades. So, I think that there's a model that we already know works. We'll still give lectures. It's like 5,000 years old, giving lectures, and we know that it doesn’t work, but we keep doing them. So, it's going to force us to shake ourselves out of a bunch of stuff that doesn't work well. I'm sure there'll still be some classes that look like classes. I think English composition will still look like English composition. I think math is still going to look a lot like math, but I think a lot of your other projects will be more...

I’ll finish this section with another clip from Ryan Delk on blending the virtual and real-world experiences:

So I think of it as the best possible experience is absolutely a best teacher in person in the smallest possible environment, meaning the fewest amount of students to the teacher ratio. That's the best. That's by far the best situation that you could get any kid into. But that's sort of like a pipe dream for most kids in the US right now, that you're just not going to have this amazing world-class teacher that can sit down with 12 kids and go deep about some specific thing you're excited about. So I think I agree that that's the optimal scenario.

I'm not one of these people that thinks virtual is inherently better. But I do think that virtual with a world-class teacher, especially if you can build interesting product experiences, which we're building on top of the virtual experience, when needed, and accompanying an in-person teacher, is better than a sort of average in-person experience.

🔗 Interesting Content

Recent content I’ve enjoyed and think you might enjoy too.

David Senra on Steve Jobs

One of my favorite recent episodes of the Founders podcast was the one where David talked about the recently released book “Make Something Wonderful”, a selection of Steve Jobs’ writings.

That is what your life is. Think of your life as a rainbow. This is again, maybe my favorite quote in the entire book. Think of your life as a rainbow arcing across the horizon of this world. You appear, have a chance to blaze in the sky, and then you disappear. What is he saying? Go for it like your life depends on it. Why? Because it does. It literally does. The two endpoints of everyone's rainbow. This is, this is again, I think one of the, he's got a ton of great ideas, right? But something you'll, if you study Steve, what he says over and over again, it's like if you need to use your inevitable death as a tool to make decisions on how you're going to spend your time, the two endpoints of everyone's rainbow are birth and death. We all experience both completely alone. And yet most people of your age have not thought about these events very much, much less even seen them in others.

How many of you have seen the birth of another human? It's a miracle. And how many of you have witnessed the death of a human? It is a mystery beyond our comprehension. No human alive knows what happens to us after our death. Some believe this, others that, but no one really knows. Again, most people your age have not thought about these events very much. It's as if we shelter you from them, afraid that the thought of mortality will somehow wound you. For me, it's the opposite. To know my arc will fall makes me want to blaze while I'm in the sky. Not for others, but for myself, for the trail that I know that I'm leaving. And I love the way he ends his speech. Now, as you live your arc across the sky, you want to have as few regrets as possible. Remember, regrets are different from mistakes.

Lots of good wisdom in this one, so make sure to check it out. I’ll call out his just-released episode on Arnold Schwarzenegger as well, which is a good complement to the Netflix documentary that I also highly recommend.

Thomas Edison’s Lab

Eric Gilliam wrote a great essay for Works in Progress on Thomas Edison. Highly recommend this and I got a lot out of it. He also did a companion solo podcast called “Tales of Edison’s Lab”. Edison was one of those once-in-a-century type people that to me really just fits the American “man in the arena” prototype.

In a field full of uncertain potentials, he often did seem to proceed to his triumphs by a certain kind of laborious sorcery, ill prepared in mathematics, disrespectful of the boundaries set by available theory. He took on all the forces of nature with a combination, as he said, rather unfairly about himself, of 2% inspiration and 98% hard work. The inspiration was informed by years of practice and shrewd observation. The hard work was simply amazing. And if the means by which he brought off his extraordinary efforts are not wholly clear, neither is the cause for his obsessive labors, no diver into nature's deepest mysteries, caring next to nothing for the advancement of knowledge and even less for the world's goods. He would become absorbed in making something work well enough to make money. The test in the marketplace was for him, apparently, the moment of truth for his experiments. And once this was passed, he became forthwith, absorbed in making something else. By the time of Edison's foray into lighting, he was a commercially successful inventor many times over.

Acquired on Lockheed Martin

Ben and David from the Acquired Podcast recently did an episode on Lockheed.

It’s a 3+ hour episode so there’s a lot there. They cover Kelly Johnson’s creation of Lockheed’s famous Skunkworks division:

So in this circus tent in a parking lot, Kelly and this super elite team from Lockheed build the first prototype US fighter jet named the Lulu Bell in 143 days start to finish. This is just wild. For years the US had been working on this technology and they hadn't gotten it operationalized. The Germans beat them to it and then in 143 days, Kelly and Lockheed go from zero to flying prototype. What a testament to him and to this organization in the circus tent that he has built, the Skunkworks.

I used Skunkworks as an example a few times in my essay and podcast from last year, “Take the Iterative Path”, on how Skunkworks and SpaceX both use iterative design to build so quickly. Check that out if it interests you.

On Acquired they also talk about Lockheed Missiles and Space Company (LMSC), the company’s space and missile division, which I had actually never heard of. And apparently it did pretty well for them:

Well, I think at times in the 60s and 70s and 80s, LMSC was the largest business by revenue. But almost through the whole time, it was by far the most profitable division within Lockheed. And at times, when we'll get into, Lockheed fell on some really hard times in the 70s. There were years where LMSC generated more than 100% of the profits of Lockheed. So all of the rest of Lockheed, Skunkworks included, was in the red. Unprofitable, bleeding money, and LMSC was keeping the company afloat.

. . . This is much more technology problems and computing problems that LMSC is tackling here. Yes, they're building missiles. Yes, they're building rockets and all that. But the core value components of those rockets is computing and silicon and ultimately software. And as we talk about all the time on this show, like, well, that's really good margins. Definitely better margins than building airplanes.

Apple Vision Pro

There’s been lots of good content out — from testers and analysts — about Apple’s Vision Pro mixed reality device.

As usual, Ben Thompson had a good writeup and was really impressed with the half-hour demo he got:

As I noted above, I have been relatively optimistic about VR, in part because I believe the most compelling use case is for work. First, if a device actually makes someone more productive, it is far easier to justify the cost. Second, while it is a barrier to actually put on a headset — to go back to my VR/AR framing above, a headset is a destination device — work is a destination.

. . . Again, I’m already biased on this point, given both my prediction and personal workflow, but if the Vision Pro is a success, I think that an important part of its market will be to at first be used alongside a Mac, and as the native app ecosystem develops, to be used in place of one. To put it even more strongly, the Vision Pro is, I suspect, the future of the Mac.

I agree with Ben’s belief that Vision Pro is set up to replace some segment of the Mac’s jobs-to-be-done, and ultimately become an amazing productivity tool.

Just as early websites on smartphones were simply the desktop versions, Apple’s Vision Pro demos show users interacting with several 2D screens floating in space. This alone can be a huge productivity boost, as evidenced by the boost you get from multiple monitors today.

But the real boost will come when the mixed reality interface breaks out of the past paradigm and you can interact with both the physical world and an infinite digital canvas. Andy Matuschak wrote about this following the announcement, talking about ideas like having apps or files live in physical space. Like a timer above your stove, or the Instacart app in your fridge.

It will be interesting to see what kinds of stories and experiences come out of the mixed reality world. Steven Johnson, who’s one of my favorite writers, did an interview on VR and AR right before the Vision Pro announcement. One of my favorite parts was when he talks about the history of immersive experiences:

There's this wonderful period, I don't know, like 250 years ago, where there was this amazing explosion of immersive, I call them kind of palaces of illusion. And they were everything, you know, there's a wonderful example of like the panorama, which was the 360 degree painting where you would come in and see a famous military battle reenacted or a skyline view of London and things like that. And there was just a huge number of experiments that happened back then, where they would try all these different configurations of ways to like, create the illusion of being in some other world.

Interestingly, they were not narrative environments, they really didn't tell a story. It was just the kind of like the body, the sensory feeling of, oh, I'm in this like other world, and I can occupy that world for a bit of time and then go back into reality. And that there was just, again, a lot of mucking around here for, you know, figuring out ways to kind of captivate people.

And it was kind of like a Cambrian explosion of all these different possibilities that then eventually consolidated basically into the cinema, right? There are all these different ways of creating the illusion that they're off in some other world. And then we kind of settled on this one approach and all those other experiments died off, basically. And the other thing that happened in that period is directly related to VR and AR is the invention of the stereoscope, which was one of these great cases where it was a scientific innovation about stereo vision.

That’s all for now — I hope you learned something interesting from this experiment!